Workforce Programs as Centers of Quality Learning

Workforce programs have long been acknowledged as centers of innovation on every community college campus, and now a new evaluation system can help college workforce leaders demonstrate the learning quality of their instructional programs.

The Workforce Education Implementation Evaluation (WEIE) framework, developed by SRI International in Menlo Park, California, is based on a six-year, National Science Foundation-supported case study of five workforce education programs across the United States. It features tools and concepts that let program leaders assess the hard-to-measure aspects of quality workforce instruction.

The full WEIE framework includes three main components: labor market assessment tools, partnership management tools, and instructional quality tools. This abstract discusses the instructional quality tools; SRI’s website provides information on all three tools.

The Four Quality Indicators and the Workforce Preparedness Quality Continuum

Literature on technician education, adult learning, and training describes the features of high-quality workforce instruction. As colleges focus more on providing students with clear pathways through higher education and into the workplace, such qualities are increasingly desired in academic coursework as well as in workforce coursework.

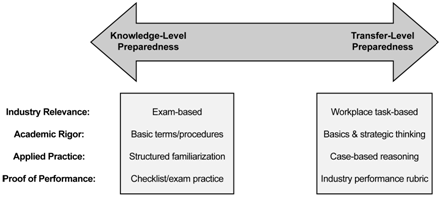

Workforce educators design their instructional programs by focusing not only on what students should know, but also on what they should be able to do in specific workplace contexts. Doing so effectively sets up a continuum of workforce preparedness quality, ranging from knowledge-level preparedness to transfer-level preparedness.

Figure 1: Four Quality Indicators

As Figure 1 shows, the WEIE concept of instructional quality centers on four implementation quality indicators: industry relevance, academic rigor, applied practice, and proof of performance. The WEIE tools can be used to check each of these indicators.

How the WEIE Works: Intended and Enacted Curriculum

The WEIE tools permit program leaders to check both the intended and enacted curriculum according to the four quality indicators. Intended performance is measured through reflective interview prompts with both leaders and instructors in the instructional program. These interviews ask them to broadly describe the primary goals of their program, the most critical topics and lesson content addressed in the program, and the key reasons they have for choosing particular instructional activities. Their answers help to conceptually position the overall program’s intended goals along the workforce preparedness quality continuum.

Once the intended performance is documented, data on the enacted performance are collected through a structured review of selected course materials, a few class observations, or both. In deciding which course materials and classes to include in the evaluation sample, evaluators should focus on the courses, and the lessons within those courses, that are considered critical for student learning success. It is also useful to select a sample representing beginning, intermediate, and advanced courses.

Once the sample is selected, a team of peers or external evaluators can be trained to rate materials and classroom activities objectively using the WEIE tools.

Preparing up to six expert reviewers, who rate course materials in pairs, takes about two to three hours of jointly reviewing a sample of three sets of lesson materials. For the WEIE, the expert panel brought together industry professionals and community college workforce educators; this mix of industry and educational perspectives is helpful. Six reviewers provide some variety of perspective while keeping manageable the consensus building needed to reach inter-rater agreement of at least 75 percent on all key rating items. One way to simplify this process is to deliver the rating surveys online and pipe the ratings into an Excel spreadsheet. This automated approach produces results immediately after each of the three rounds of review, so that disagreements can be addressed and coding rules clarified. Once agreement is reached, each pair of raters may review additional lessons independently.

Depending on the program's needs, some or all of the items in the rating rubric may be used. For example, a program may want to focus on items addressing academic rigor, or on items addressing opportunities for applied practice. A corpus of at least four sets of lesson materials per course is recommended (e.g., 12 lessons in total across a beginning, an intermediate, and an advanced course), but more may be included if time and need allow.
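The 75 percent agreement check after each review round can be scripted rather than tallied by hand. The text does not say which agreement statistic the WEIE team used, so the Python below is only a sketch that assumes simple pairwise percent agreement per rubric item: for each item, the share of reviewer pairs who gave identical scores. The item names, the 1-3 scale, and the function names are hypothetical.

```python
from itertools import combinations

AGREEMENT_THRESHOLD = 0.75  # "at least 75 percent" agreement, from the text


def pairwise_agreement(scores):
    """Fraction of reviewer pairs that gave one item the identical score.

    Assumed statistic: simple observed agreement (the per-item term used in
    Fleiss' kappa), with no correction for chance agreement.
    """
    pairs = list(combinations(scores, 2))
    if not pairs:
        raise ValueError("need scores from at least two reviewers")
    return sum(a == b for a, b in pairs) / len(pairs)


def items_needing_discussion(round_ratings, threshold=AGREEMENT_THRESHOLD):
    """Return {item: agreement} for every item that falls below the threshold.

    round_ratings maps a (hypothetical) rubric item name to the scores all
    reviewers gave that item for the same lesson in one review round.
    """
    flagged = {}
    for item, scores in round_ratings.items():
        agreement = pairwise_agreement(scores)
        if agreement < threshold:
            flagged[item] = agreement
    return flagged


if __name__ == "__main__":
    # Illustrative scores only, not WEIE data: six reviewers, 1-3 scale.
    round_1 = {
        "industry_relevance": [3, 3, 3, 3, 3, 3],
        "applied_practice": [2, 3, 2, 3, 2, 3],
    }
    for item, agreement in items_needing_discussion(round_1).items():
        print(f"{item}: {agreement:.0%} agreement, discuss before next round")
```

Under this assumed statistic, one dissenting score among six reviewers leaves 10 of 15 pairs in agreement (about 67 percent), so a single outlier is enough to flag an item for discussion; a program that prefers a looser rule could instead compare each score with the most common score.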

For classroom observation, about one to two hours are needed to train observers in the concepts of depth of learning and problem complexity, and in how to code and tally the time spent on instructor-led and student-led activities. Where possible, observations should focus on the lessons associated with the lesson materials that have been rated. Doing so makes it easier to review the interplay between the printed curriculum and instructional methods.
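The time tally described above reduces to summing coded segments. The Python below is a minimal sketch, assuming observers log each class segment with start and end times in minutes and a hypothetical code of "instructor" or "student" for who leads the activity; the `Segment` format and function name are illustrative, not part of the WEIE tools. It reports each mode's share of observed time, the kind of figure behind the roughly 50 percent student-led time reported for the large-scale partnership's beginning classes.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One coded stretch of class time (hypothetical observation format)."""
    start_min: float  # minutes since the start of class
    end_min: float
    mode: str         # e.g. "instructor" or "student": who leads the activity


def time_shares(segments):
    """Return each mode's fraction of the total observed time."""
    totals = {}
    for seg in segments:
        if seg.end_min < seg.start_min:
            raise ValueError(f"segment ends before it starts: {seg}")
        totals[seg.mode] = totals.get(seg.mode, 0.0) + (seg.end_min - seg.start_min)
    observed = sum(totals.values())
    if observed == 0:
        raise ValueError("no observed time to tally")
    return {mode: minutes / observed for mode, minutes in totals.items()}


if __name__ == "__main__":
    # Illustrative 50-minute class, not data from the WEIE study.
    log = [
        Segment(0, 20, "instructor"),   # lecture and demonstration
        Segment(20, 45, "student"),     # hands-on lab
        Segment(45, 50, "instructor"),  # wrap-up
    ]
    for mode, share in time_shares(log).items():
        print(f"{mode}-led: {share:.0%}")
```

Keeping the raw segments, rather than only the totals, also leaves room to attach depth-of-learning or problem-complexity codes to each segment later.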

The WEIE also includes tools for surveying and interviewing students. The interviews involve collecting samples of graded student work and discussing with each student what was effective and ineffective for learning with the course materials.

Illuminating Results From the WEIE Evaluation

The results can be illuminating. For example, applying the WEIE instructional quality evaluation framework to two contrasting workforce programs—one created to meet the needs of a large-scale industry partnership and one created to provide outreach to employers through a periodic advisory panel—showed the relative instructional strengths and weaknesses of both.

According to the WEIE, the large-scale partnership case excelled in industry relevance through integrated earn-while-you-learn coursework and internships, and through a classroom located in a factory. It also excelled in providing applied practice opportunities: student-led activities accounted for about 50 percent of course time in beginning classes, and in advanced courses, student-led activities were based on relatively complex problems, with students observed engaging in strategic and extended reasoning, not just recalling facts. These practices were functioning at the transfer level of the workforce preparedness quality continuum.

The employer outreach program scored in the middle of the continuum for industry relevance and applied practice but at the transfer level of preparedness for its strong academic rigor. By requiring students in hands-on labs to explain decisions they made based on scientific and mathematical concepts, this program showed it was building students' ability to become strong, flexible lifelong learners who could adapt to changes in the labor market.

A Closer Look at Problem Complexity

It is helpful to compare the complexity of problems and the depth of learning opportunities offered in beginning and advanced courses. This kind of analysis can help educators determine whether they are keeping students adequately engaged and challenged at each level; it can also help them design a course sequence that builds to maximum complexity and depth in the advanced courses. As Table 1 shows, the large-scale partnership program achieved transfer-level implementation on both factors in its advanced courses, whereas the employer outreach program's advanced courses achieved a mixed level of implementation. The point of this comparison is not to favor the large-scale partnership over the employer outreach program, but rather to show how the WEIE can give program leaders useful data for refining their program goals.

Table 1: Implementation Placement

| Program | Knowledge-Focused Implementation Placement | Mixed Implementation Placement | Transfer-Focused Implementation Placement |
|---|---|---|---|
| Large-scale partnership | Basic problem complexity in beginning courses | Adequate deep learning opportunities in beginning courses | High problem complexity in advanced courses; many deep learning opportunities in advanced courses |
| Employer outreach | Basic problem complexity in beginning courses; few deep learning opportunities in beginning courses | Medium problem complexity in advanced courses; adequate deep learning opportunities in advanced courses | |

The Nuances of Student Perspectives

The WEIE also revealed nuances that can make a big difference in student engagement. In the large-scale partnership program, review of materials showed that instructors were highly selective about the textbook readings they asked students to complete. Class observations showed that instructors provided a targeted review and demonstration of how key concepts worked. Students reported appreciating these approaches.

In contrast, the employer outreach program required students to read an average of 46 pages per lesson, with each lesson introducing about 38 new terms and concepts on average. After the readings, students also took online quizzes on a small sample of those terms and concepts. About half the students reported in surveys that the reading load was challenging. Classroom activities were based on PowerPoint presentations provided by an industry vendor, with a short step-by-step lab to close each class. In interviews, several students said they often felt they had to rely on one another, rather than bother the instructor, to complete lab tasks.

The Challenge of Classroom Assessment

The WEIE instructional evaluation also revealed the challenges instructors face with classroom assessment. Even though both programs featured innovative instructional materials and methods to support learning, neither had particularly effective ways of measuring student performance. For example, hands-on lab performance was usually rated only on whether students completed the task. Observers saw no effort to give more detailed feedback on the degree of knowledge and skill proficiency involved in the hands-on work.

Expert reviewers wanted to see both programs use more situational judgment tasks to assess how well students could reason through real-world problems with their newly acquired knowledge and skills. These tasks were particularly desired for professional skills such as teamwork and conflict resolution. One instructor in the large-scale partnership program had developed a paper self-assessment that introduced students to the kinds of challenging interactions they might encounter with a supervisor or coworker and then checked the kinds of responses students gave. This kind of assessment offers a way to engage students in developing professional knowledge and skills.

WEIE Community College Uses

Although developed by researchers, the WEIE's instructional assessment tools can be used and adapted by community college practitioners. Potential uses include faculty meetings to select externally created textbooks and assessment materials, and periodic college program reviews for accreditation purposes. Workforce educators may also want to use the tools in industry advisory panel meetings or to guide working collaborations with employers on lesson design.

Louise Yarnall is a Senior Research Social Scientist at the Center for Technology in Learning, Education Division, SRI International.

Opinions expressed in Innovation Showcase are those of the author(s) and do not necessarily reflect those of the League for Innovation in the Community College.